Open-Source Stereo Video Camera System

September 1, 2023


Democratizing VR Content Creation Through Open-Source Innovation

As VR headsets become more mainstream, there's a growing need for accessible stereoscopic content creation tools. Most commercial stereo cameras are prohibitively expensive or locked into proprietary ecosystems. This project tackles that challenge head-on by developing a modular, open-source stereo video camera system specifically designed for VR life-logging and content creation.

Project Demonstration

Main Project Overview

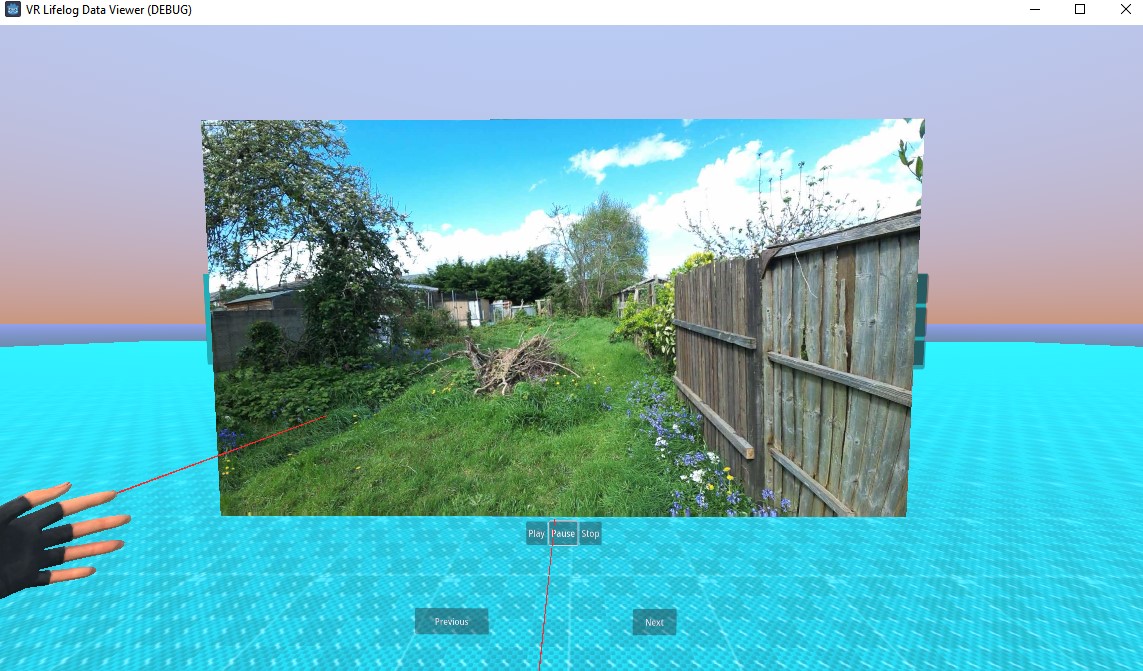

VR Experience Demo

The Problem with Current VR Content

The VR industry faces a significant content gap. While 360-degree videos exist, they often lack the depth perception that makes VR truly compelling. Traditional stereo cameras like the Snapchat Spectacles 3 or Kandao QooCam Ego are either discontinued or cost over £500, putting them out of reach for most creators. Even Apple's spatial video on iPhone 15 Pro, while impressive, requires buying into their expensive ecosystem.

Technical Architecture

Hardware Foundation

Built around the Raspberry Pi 5's dual MIPI CSI camera ports, the system uses two synchronized cameras spaced 65 mm apart, roughly the average human inter-pupillary distance. This mimics natural human vision for authentic depth perception.
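To make the role of the 65 mm baseline concrete, the standard pinhole stereo relation (disparity = focal length × baseline / depth) gives the horizontal pixel offset between the two views for an object at a given distance. The sketch below is illustrative only: `focal_px` is an assumed focal length in pixels, not a measured property of the cameras used in this project, and the function name is hypothetical.

```python
def disparity_px(depth_m: float, baseline_m: float = 0.065, focal_px: float = 1000.0) -> float:
    """Horizontal disparity in pixels for a point at depth_m.

    Assumes an ideal pinhole model with two parallel cameras.
    focal_px is an assumed value chosen for illustration.
    """
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return focal_px * baseline_m / depth_m


# Nearby objects shift far more between the two views than distant ones,
# which is the cue the viewer's brain reads as depth.
for depth in (0.5, 1.0, 2.0, 5.0):
    print(f"{depth:4.1f} m -> {disparity_px(depth):6.1f} px")
```

Because disparity falls off as 1/depth, a fixed 65 mm baseline gives strong depth cues at arm's length and almost none for distant scenery, much like human vision.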

Key Hardware Decisions:

- Raspberry Pi 5: Chosen over Pi Pico after discovering SPI bandwidth limitations for high-framerate video

- Dual Camera Setup: Side-by-side configuration for true stereoscopic capture

- Modular Design: Open-source 3D-printable housing for various mounting options

Stereo Processing Pipeline

The captured footage undergoes automated processing using a Python-based pipeline:

Raw Dual Video → FFmpeg Processing → Side-by-Side Format → Scene Classification → VR-Ready Content

- FFmpeg Integration: Handles video synchronization and format conversion

- Scene Detection: IBM's MAX-Scene Classifier API automatically tags content for easy browsing

- Automated Workflow: Scripts handle the entire processing pipeline without manual intervention
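As a rough sketch of the side-by-side step, the snippet below builds an FFmpeg command that places the left and right recordings next to each other using FFmpeg's `hstack` filter. The function names, file names, and the choice of the `libx264` encoder are assumptions made for illustration; the project's actual scripts may be structured differently.

```python
import subprocess
from pathlib import Path


def build_sbs_command(left: Path, right: Path, output: Path) -> list[str]:
    """Return an FFmpeg command that stacks two videos left|right.

    hstack requires both inputs to have the same height.
    """
    return [
        "ffmpeg", "-y",                 # overwrite the output if it exists
        "-i", str(left),                # input 0: left-eye video
        "-i", str(right),               # input 1: right-eye video
        "-filter_complex", "[0:v][1:v]hstack=inputs=2[v]",
        "-map", "[v]",                  # use the stacked stream as the only video
        "-c:v", "libx264",              # assumed encoder; needs an FFmpeg build with libx264
        str(output),
    ]


def make_side_by_side(left: Path, right: Path, output: Path) -> None:
    """Run the command and raise CalledProcessError if FFmpeg fails."""
    subprocess.run(build_sbs_command(left, right, output), check=True)
```

Calling `make_side_by_side(Path("left.mp4"), Path("right.mp4"), Path("sbs.mp4"))` would produce a single file twice as wide as each input. Keeping command construction separate from execution makes the step easy to inspect and test without FFmpeg installed.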

VR Software Experience

Built in Godot 4 using the advanced XR toolkit, the VR application provides an intuitive interface for browsing and experiencing captured content:

- Immersive Viewing: Content displayed as virtual windows in 3D space

- Smart Organization: AI-powered scene detection creates automatic categories

- Natural Navigation: Designed specifically for VR interaction patterns
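The automatic categories could be derived along these lines: take the classifier's predictions for each clip, keep the most probable label, and group clips that share it. This sketch assumes each prediction is a dictionary with `label` and `probability` keys; the clip names, labels, scores, and the 0.3 confidence threshold are made-up illustration values, not output from the project.

```python
from collections import defaultdict


def top_label(predictions: list[dict], min_probability: float = 0.3) -> str:
    """Return the most probable label, or 'uncategorized' if none is confident enough."""
    if not predictions:
        return "uncategorized"
    best = max(predictions, key=lambda p: p["probability"])
    return best["label"] if best["probability"] >= min_probability else "uncategorized"


def group_by_scene(clips: dict[str, list[dict]]) -> dict[str, list[str]]:
    """Map each scene label to the list of clip names tagged with it."""
    categories: dict[str, list[str]] = defaultdict(list)
    for name, predictions in clips.items():
        categories[top_label(predictions)].append(name)
    return dict(categories)


# Hypothetical classifier output for four clips.
example = {
    "clip_001.mp4": [{"label": "beach", "probability": 0.82},
                     {"label": "coast", "probability": 0.11}],
    "clip_002.mp4": [{"label": "kitchen", "probability": 0.64}],
    "clip_003.mp4": [{"label": "beach", "probability": 0.55}],
    "clip_004.mp4": [{"label": "alley", "probability": 0.12}],
}
print(group_by_scene(example))
```

The low-confidence fallback keeps poorly classified clips out of the named categories, so the VR browser does not show misleading groupings.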

Real-World Performance

The system exceeded initial expectations, achieving:

- Resolution: Capable of recording above 1080p (the original target was 720p)

- Framerate: Smooth 30fps stereoscopic recording

- Processing: Fully automated pipeline from capture to VR-ready content

- Cost: Under £200 total system cost vs £500+ commercial alternatives

Technical Challenges Overcome

Hardware Compatibility Issues

Initial attempts with Raspberry Pi Pico and SPI cameras failed due to bandwidth limitations. The solution required upgrading to Pi 5's dedicated camera ports, which provided the necessary data throughput for dual video streams.
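A back-of-the-envelope calculation shows why a serial bus struggles here. The sketch below compares the uncompressed data rate of two 720p streams at 30 fps with the best-case throughput of an SPI link. The 16 bits per pixel and the 30 MHz SPI clock are assumptions chosen for illustration, not measurements from this project, and on-camera compression would lower the demand, so treat the result as an upper bound.

```python
def raw_bitrate(width: int, height: int, fps: int, bits_per_pixel: int) -> int:
    """Uncompressed video data rate in bits per second for one camera."""
    return width * height * fps * bits_per_pixel


# Hypothetical SPI clock; SPI moves at most one bit per clock cycle.
ASSUMED_SPI_CLOCK_HZ = 30_000_000

per_camera = raw_bitrate(1280, 720, 30, 16)  # 720p30 at 16 bits per pixel (assumed)
both_cameras = 2 * per_camera

print(f"one camera : {per_camera / 1e6:.1f} Mbit/s")
print(f"two cameras: {both_cameras / 1e6:.1f} Mbit/s")
print(f"SPI budget : {ASSUMED_SPI_CLOCK_HZ / 1e6:.1f} Mbit/s")
print(f"demand is {both_cameras / ASSUMED_SPI_CLOCK_HZ:.1f}x the SPI budget")
```

Under these assumptions the two streams need well over an order of magnitude more bandwidth than the SPI link can carry, which is consistent with the move to dedicated camera ports.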

Synchronization Precision

Ensuring frame-accurate synchronization between the two cameras required careful timing calibration and FFmpeg processing to eliminate temporal drift, which would otherwise cause eye strain in VR.
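One way to quantify the timing error between the two streams is to match each left-eye frame with the nearest right-eye frame by timestamp and look at the offsets. The sketch below is a minimal illustration of that idea, not the project's calibration procedure; the function names and the 10 ms example delay are hypothetical.

```python
from bisect import bisect_left


def pair_frames(left_ts: list[float], right_ts: list[float]) -> list[tuple[int, int, float]]:
    """Match each left frame to the nearest right frame.

    Both lists hold capture timestamps in seconds, sorted ascending.
    Returns (left_index, right_index, right_minus_left_seconds) tuples.
    """
    pairs = []
    for i, t in enumerate(left_ts):
        j = bisect_left(right_ts, t)
        # The nearest right frame is either just before or just after t.
        candidates = [k for k in (j - 1, j) if 0 <= k < len(right_ts)]
        if not candidates:
            continue  # right stream is empty
        best = min(candidates, key=lambda k: abs(right_ts[k] - t))
        pairs.append((i, best, right_ts[best] - t))
    return pairs


def mean_offset(pairs: list[tuple[int, int, float]]) -> float:
    """Average right-minus-left offset in seconds (0.0 if there are no pairs)."""
    return sum(d for _, _, d in pairs) / len(pairs) if pairs else 0.0


# Example: right camera starts 10 ms late at 30 fps (made-up values).
left = [i / 30 for i in range(5)]
right = [t + 0.010 for t in left]
pairs = pair_frames(left, right)
print(f"mean offset: {mean_offset(pairs) * 1000:.1f} ms")
```

A roughly constant offset like this could be corrected by shifting one input, for example with FFmpeg's `-itsoffset` input option, while an offset that grows over time would indicate clock drift that needs a different fix.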

VR-Specific Optimization

Unlike traditional video, VR content requires specific formatting and metadata. The pipeline automatically handles side-by-side formatting and adds the necessary spatial information for proper VR playback.
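As one possible way to attach stereo layout information, FFmpeg's Matroska/WebM muxer accepts a `stereo_mode` metadata tag. The sketch below builds a command that remuxes an existing side-by-side file into a Matroska container and marks it as left-right stereo. Whether this project uses this tag, and whether a given VR player honors it, is an assumption; the function and file names are hypothetical.

```python
import subprocess
from pathlib import Path


def build_stereo_tag_command(sbs_input: Path, output_mkv: Path) -> list[str]:
    """Return an FFmpeg command that copies streams and tags the stereo layout.

    No re-encoding takes place: '-c copy' passes the streams through unchanged.
    """
    return [
        "ffmpeg", "-y",
        "-i", str(sbs_input),
        "-c", "copy",                            # remux only
        "-metadata", "stereo_mode=left_right",   # left eye occupies the left half
        str(output_mkv),
    ]


def tag_as_stereo(sbs_input: Path, output_mkv: Path) -> None:
    """Run the command and raise CalledProcessError if FFmpeg fails."""
    subprocess.run(build_stereo_tag_command(sbs_input, output_mkv), check=True)
```

Players that do not read container metadata still need to be told the layout explicitly, so a file-naming convention or a sidecar file may be needed alongside the tag.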

Innovation in Accessibility

This project does more than recreate existing solutions; it rethinks how stereoscopic content is created:

- Open Source: Complete hardware designs and software available on GitHub

- Modular: Components can be adapted for different use cases (handheld, wearable, fixed mount)

- Cost-Effective: Demonstrates that professional-quality stereo recording is possible with off-the-shelf components

- VR-First: Designed specifically for VR consumption, not adapted from traditional video

Future Developments

The modular architecture enables exciting possibilities:

- Spatial Audio: Integration of stereo microphones for complete immersive capture

- Eye Tracking: Enhanced interaction within the VR browsing experience

- Containerization: Docker deployment for easier setup and distribution

- Hand Tracking: Natural gesture-based navigation in VR space

Impact on Content Creation

By making stereoscopic recording accessible to makers and content creators, this project addresses VR's fundamental content problem. Personal, authentic 3D content creates the emotional connection that drives VR adoption, something generic 360-degree videos cannot achieve.

The success of this open-source approach demonstrates that innovative VR tools don't need massive corporate backing. With the right technical foundation and community collaboration, individual developers can create solutions that push the entire industry forward.

This project was completed as part of an MEng Electronics Engineering final year project at the University of Southampton, supervised by Dr. Tom Blount. The complete technical documentation and source code are available on GitHub.